In 2023, my team and I began working on perhaps one of the most ambitious content audits ever conducted on the HubSpot Blog. We’ve run content audits in the past — but not like this.

We ran the audit in three phases:

- Phase 1 addressed our oldest content.

- Phase 2 evaluated our lowest-performing content.

- Phase 3 assessed the value of our topic clusters.

When it was all said and done, we audited over 10,000 blog post URLs and over 450 topic clusters.

In this post, I’m going to focus on phase one of our audit. I’ll walk you through how we audited our oldest content and how we took action. Plus, I’ll share the results we found.

But first, let me give you some background on why we decided to run an audit of this magnitude.

Why We Audited

It all started in early 2023. At the time, my team was called the Historical Optimization team, and we sat at the intersection of HubSpot’s SEO and Blog teams.

We were responsible for updating and optimizing our existing blog posts and finding growth opportunities within our library. (We’ve since evolved into what is now the EN Blog Strategy team.)

In case you’re new here, the HubSpot Blog is HUGE.

For context, the blog was home to 13,822 pages in February of 2023, the month we began our audit. Ahrefs’ Mateusz Makosiewicz even declared it the “biggest corporate blog … ever” in an SEO case study earlier this year.


While we are fortunate to have a high domain authority and drive millions of visits per month, having a blog of this size does not come without challenges.

As our library ages, the amount of opportunity for new content across our blog properties and clusters shrinks.

So, we decided to audit our library to find opportunities for optimization.

We hypothesized that we could uncover “greenspace” and “quasi-greenspace” — topics that we have covered but haven’t capitalized on that well — by auditing the oldest 4,000 URLs in our library.

Although this was only about a third of our content library, we believed we’d be able to unearth some traffic opportunities and give our blog a boost.

Around the same time, we started to feel the effects of Google’s March 2023 Core Update that emphasized experience, which our Technical SEO team immediately started addressing.

However, another part of that algorithm update emphasized content freshness and helpfulness: in other words, how current and useful our content is to our readers.

This is where we really felt a sense of urgency.

Because we had 4,000 URLs with publish dates ranging from 2006 to 2015, we already knew that this chunk of content was not fresh or helpful.

So, we got to work and audited those blog posts over the course of ten weeks.

Eventually, we added phases two and three to our plan so we could further address unhelpful content and clusters.

How We Audited Our Oldest Content

1. Define our goals.

Before we started auditing the content, it was important for us to determine the objectives.

For some publishers, the goal of a content audit may include improving on-page SEO, enhancing user engagement, aligning content with marketing goals, or identifying content gaps.

For this particular audit, it meant uncovering “greenspace” and “quasi-greenspace” in our blog library, and improving our overall content freshness.

We also had to determine the scope of our audit.

There’s no right or wrong way to approach this. Depending on your goals and the size of your website, you could audit the whole thing in one go.

You could also start with a small portion of your site (such as product pages or specific topic clusters) and build out from there.

Since HubSpot has such a large content library, we opted to limit this audit to our oldest 4,000 URLs. Not only was this more manageable than reviewing all of our content in one audit, but this also targeted URLs that were more likely to benefit from an update or prune.

We also did this knowing that we would later address the rest of our library during phases two and three.

2. Gather our content inventory.

Once we established our goals and scope, we had to gather the oldest 4,000 blog posts and put them into a spreadsheet.

This process can vary depending on the tools and CMS you use. Here’s how we did it using Content Hub:

1. Log into HubSpot and navigate to the Blog page in Content Hub.

2. Navigate to the Actions drop-down and click Export blog posts.

3. Select a file format and click Export. HubSpot will email you the export with all of your blog post information, and you’ll also get a notification in HubSpot once it’s ready.

4. Download your export and open it in your preferred spreadsheet software (I’m usually a Google Sheets girlie, but I had to use Microsoft Excel since the file was so large).

5. Review each column in the spreadsheet and delete the ones that are not relevant to your audit. We immediately deleted the following:

- Post SEO title

- Meta description

- Last modified date

- Post body

- Featured image URL

- Head HTML

- Archived

6. Once the irrelevant columns were removed, the following remained:

- Blog name

- Post title

- Tags

- Post language

- Post URL

- Author

- Publish date

- Status

7. Filter the Post language column for EN posts only. Delete the column once the sheet is filtered.

8. Filter the Status column for PUBLISHED only. Delete the column once the sheet is filtered.

9. Sort the sheet by Publish date from oldest to newest.

10. Highlight and copy the first 4,000 rows and paste them into a separate spreadsheet.

11. Name the new spreadsheet Content Audit Master.

If you’re feeling fancy, you can also create a custom report in Content Hub and select only the fields you want included in the audit so you don’t have to filter as much when setting up your spreadsheet.
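For teams that prefer scripting to spreadsheet filtering, steps 5 through 11 can be approximated in a few lines of Python. This is a minimal sketch, not a Content Hub feature: the column names mirror the ones listed above, but the exact header text, the `en`/`PUBLISHED` values, and the `YYYY-MM-DD` date format are assumptions to check against your own export.

```python
import csv
import io
from datetime import datetime

# Columns kept for the audit (step 6). Header names are assumed to match the export.
KEEP_COLUMNS = ["Blog name", "Post title", "Tags", "Post URL", "Author", "Publish date"]
DATE_FORMAT = "%Y-%m-%d"  # assumed; change to match how your export writes dates


def oldest_published_en_posts(csv_text, limit=4000):
    """Filter to published EN posts, sort oldest first, and keep the first `limit` rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    posts = [
        row for row in reader
        if row["Post language"].strip().lower() == "en"    # step 7
        and row["Status"].strip().upper() == "PUBLISHED"   # step 8
    ]
    # Step 9: sort oldest to newest by publish date.
    posts.sort(key=lambda row: datetime.strptime(row["Publish date"].strip(), DATE_FORMAT))
    # Steps 5-6 and 10: drop unused columns and keep the oldest `limit` posts.
    return [{col: row[col] for col in KEEP_COLUMNS} for row in posts[:limit]]


def write_master_sheet(rows, path="Content Audit Master.csv"):
    """Step 11: save the audit inventory as its own file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=KEEP_COLUMNS)
        writer.writeheader()
        writer.writerows(rows)
```

To run it against a real export, read the file with `open(path, newline="", encoding="utf-8").read()` and pass the text to `oldest_published_en_posts`.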

3. Retrieve the data.

After compiling all of the content needed for our audit, we had to collect relevant data for each blog post.

For this audit, we kept it pretty simple, only analyzing total organic traffic from the previous calendar year, total backlinks, and total keywords.

We did this because our recommended action for each URL would be determined mainly by evaluating the post itself. (We’ll cover this in the next step.)

We obtained organic traffic data from Google Search Console and used a VLOOKUP to match each URL with its corresponding number of Clicks.
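The VLOOKUP step amounts to a keyed join. Here is a minimal Python sketch of the same idea, assuming a Search Console pages export with `Top pages` and `Clicks` columns (check the headers in your own file) and a simple, hypothetical URL normalization so trailing slashes don’t cause missed matches.

```python
def normalize_url(url):
    """Hypothetical normalization: trim whitespace and any trailing slash."""
    return url.strip().rstrip("/")


def attach_clicks(audit_rows, gsc_rows, url_column="Top pages", clicks_column="Clicks"):
    """VLOOKUP-style join: add each audit URL's GSC clicks, or 0 if GSC has no row for it."""
    clicks_by_url = {
        normalize_url(row[url_column]): int(row[clicks_column].replace(",", ""))
        for row in gsc_rows
    }
    for row in audit_rows:
        row["Clicks"] = clicks_by_url.get(normalize_url(row["Post URL"]), 0)
    return audit_rows
```

Defaulting to 0 instead of returning an error (VLOOKUP’s #N/A) treats any URL missing from the export as having no clicks in the chosen date range.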

Then, we got backlink and keyword data by copying and pasting our audit URLs into Ahrefs’ Batch Analysis tool and exporting the data into our spreadsheet.

At the time of our audit, the Batch Analysis tool could only analyze up to 200 URLs at once, so we had to repeat this step 20 times until we had data for each URL.

Luckily, Ahrefs has rolled out a Batch Analysis 2.0 tool since then, which can analyze up to 1,000 URLs at once. So, if we were to do a similar audit in the future, it would take much less time to retrieve this data.
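The batch count is ceiling division: 4,000 URLs at 200 per run is 20 runs, while a 1,000-URL limit cuts that to 4. A small Python helper (hypothetical, not part of Ahrefs) can pre-split the URL list so each chunk is ready to paste:

```python
def chunk(urls, size):
    """Split `urls` into consecutive lists of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


audit_urls = [f"https://example.com/post-{n}" for n in range(4000)]  # placeholder URLs
print(len(chunk(audit_urls, 200)))   # 20
print(len(chunk(audit_urls, 1000)))  # 4
```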

4. Evaluate the content.

Next, we assessed each piece of content by using the collected data. Then, we evaluated the post itself to determine the following:

- Type of Content

- Freshness Level

- Organic Potential

Type of Content

The HubSpot Blog is home to many different types of blog posts, each serving a unique purpose. Labeling each post helped us determine its relevance and became a key factor in our decision to update or prune.

While this isn’t an exhaustive list of all the content types you could find on the HubSpot Blog, we narrowed it down to the following for the purposes of this audit:

- Educational: A topic that can educate the user on a pain point or problem they know they have.

- Thought Leadership: A topic that can educate the user on a pain point or problem they didn’t know they had until an expert drew it to their attention.

- Business Update: A HubSpot-related piece of news or a press release that is likely not evergreen.

- Newsjacking: An industry-related piece of news or a press release that is likely not evergreen.

- Research: A collection of data or the results of an experiment that is used to educate the reader. This topic may or may not be evergreen, but the content is not and needs updates to stay fresh.

Freshness Level

Because the posts in this audit hadn’t been updated in a long time, none of them could be considered 100% “fresh.” However, we took different types of freshness into account when determining what action needed to be taken on the URLs.

For example, some topics, such as Google+, are so outdated that an update would be silly. However, plenty of topics were still evergreen, even though our content was not.

The following scale helped us make decisions on whether the URL had value with regard to freshness:

- Outdated: The topic is outdated, and an update may not be possible.

- Stale: The topic is evergreen, but it would need an extensive update to make the content more competitive.

- Relatively Fresh: The topic is evergreen, and it would only need a moderate update to make the content competitive.

Organic Potential

To determine the organic potential of each URL, we had to ask ourselves the following question: Will anyone search for the content on Google?

- Yes: Someone would definitely search for this, so we’ll need to optimize/recycle the content.

- No: Someone would not search for this. There’s no point in optimizing/recycling the content since there’s no possible focus keyword.

For all of the posts marked “Yes” for organic potential, we recommended a focus keyword for the re-optimized content to compete for. We did this by evaluating the existing title, slug, and content. Then, we did some keyword research on Ahrefs and reviewed the Google SERP for that query.

We also included the focus keyword’s monthly search volume (MSV) to help prioritize which updates to perform first. We did this by plugging the recommended keyword into Ahrefs’ Keywords Explorer and adding the MSV to our master sheet.

For an extra layer of caution, we also checked for cannibalization on all posts marked “Yes” for organic potential. There are a few ways to do this:

- Do a site search and see if any URLs come up for the focus keyword.

- Plug the focus keyword into Google Search Console to see if any URLs come up.

- Plug the focus keyword into Ahrefs’ Keywords Explorer, scroll down to Position History, search your domain name, and filter for Top 20 and your desired time frame (I usually check the last six months). If multiple URLs are found, that may indicate cannibalization.

If the focus keyword was flagged for cannibalization, we either found a different focus keyword or noted that the URL should be redirected to the fresher post.

If no cannibalization was found, then we had the green light to move forward with updating the post.
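If you export ranking history instead of eyeballing it, the cannibalization check reduces to grouping by keyword. This Python sketch assumes rows of `(keyword, url, position)` pulled from whatever rank tracker you use; the row shape and the top-20 cutoff are assumptions that mirror the Ahrefs filter described above, not an Ahrefs export format.

```python
from collections import defaultdict
from urllib.parse import urlparse


def on_domain(url, domain):
    """True if the URL's host is `domain` or one of its subdomains."""
    host = urlparse(url).netloc.lower()
    return host == domain or host.endswith("." + domain)


def cannibalized_keywords(ranking_rows, domain, max_position=20):
    """Map each keyword to its URLs when two or more of your URLs rank in the top `max_position`."""
    urls_by_keyword = defaultdict(set)
    for keyword, url, position in ranking_rows:
        if on_domain(url, domain) and position <= max_position:
            urls_by_keyword[keyword].add(url)
    return {kw: sorted(urls) for kw, urls in urls_by_keyword.items() if len(urls) > 1}
```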

5. Recommend an action.

Once a post was completely evaluated, we turned the insights into action items.

Each URL was placed into one of the following categories:

- Keep: No action is needed because both the content and the URL are good.

- Optimize: The content is good but outdated in terms of freshness or SEO practices. Keep the spirit of the article, but refresh and re-optimize to improve performance.

- Recycle: The content is not salvageable, but the URL still has value (in terms of backlinks or keyword opportunity). Create new content from scratch, but retain the URL.

- Prune: Neither the content nor the URL has value from an organic standpoint.
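The four categories can be read as a small decision rule. The Python sketch below is one hypothetical way to encode the definitions above; the three yes/no inputs are simplifications of judgment calls made while reviewing each post, not fields from any tool.

```python
def recommend_action(content_has_value, url_has_value, needs_refresh):
    """Hypothetical encoding of the Keep / Optimize / Recycle / Prune definitions."""
    if content_has_value:
        # Good content either stays as-is or gets refreshed and re-optimized.
        return "Optimize" if needs_refresh else "Keep"
    if url_has_value:
        # Content isn't salvageable, but backlinks or keyword opportunity justify reusing the URL.
        return "Recycle"
    # Neither the content nor the URL has organic value.
    return "Prune"
```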

Audit Insights

Out of the 4,000 URLs we audited, 951 (23.78%) were categorized as posts with organic potential and recommended for optimization or recycling. Additionally, 2,888 URLs were recommended to be pruned. That’s about 72.2% of the audit.

These posts either did not have organic potential, posed a cannibalization risk, or were so outdated that there was no point in updating them.

The remaining 161 URLs either did not require any action or had already been redirected.
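As a quick check, the three groups account for all 4,000 URLs. A few lines of Python reproduce the shares above (the group labels are just descriptive names):

```python
TOTAL_AUDITED = 4000
optimize_or_recycle = 951
prune = 2888
no_action = TOTAL_AUDITED - optimize_or_recycle - prune  # 161

for label, count in [("Optimize or recycle", optimize_or_recycle),
                     ("Prune", prune),
                     ("No action or already redirected", no_action)]:
    share = 100 * count / TOTAL_AUDITED
    print(f"{label}: {count:,} URLs ({share:.3f}%)")
```

951 out of 4,000 is exactly 23.775%, which rounds to the 23.78% quoted above.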

How We Took Action

The action taken for a URL was determined by its potential for organic traffic.

The URLs with organic potential were delivered to our Blog team and recommended to be optimized or recycled.

Meanwhile, the URLs with no organic potential were delivered to our SEO team and recommended to be archived or redirected.

First, let’s walk through how we took action on the posts recommended to be optimized or recycled.

Taking Action on Content with Organic Potential

Before addressing any of the 951 posts with organic potential, we needed to figure out the following:

- Our capacity for strategic analysis and brief writing

- The capacity of our in-house writing staff and available freelancers

- Our capacity to edit the updates

We coordinated with stakeholders and determined we only had the bandwidth to update 240 posts in 2024 (in addition to the dozens of blog posts we update each month). This initiative was internally known as the “De-Age the Blog Project” and was led by my EN Blog Strategy teammate Kimberly Turner.

Once we knew how many posts we could take on, we had to narrow down which ones to prioritize. We did this by evaluating the complexity of the lift required for each post update:

- Simple Update: The content updates needed are relatively light, making them suitable for freelancers.

- Complex Update: The content updates needed are heavy, making them better suited for in-house writers.

- Recycle: Content is not salvageable, but the URL is. Rewrite the post from scratch, but retain the URL.

- No Opportunity: Pass on updating.

We originally prioritized updating the simplest URLs first, but later pivoted our strategy to tackle the URLs with the highest MSV potential, regardless of update complexity.

We did this because we wanted to get the most traffic potential out of our limited update capacity.
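The final prioritization rule (skip the no-opportunity posts, then take the highest-MSV candidates until capacity runs out) can be sketched in Python like this. The `complexity` and `msv` field names are hypothetical audit-sheet columns; 240 is the capacity figure from above.

```python
def prioritize(candidates, capacity=240):
    """Return up to `capacity` posts with the highest MSV, ignoring update complexity."""
    eligible = [post for post in candidates if post["complexity"] != "No Opportunity"]
    eligible.sort(key=lambda post: post["msv"], reverse=True)
    return eligible[:capacity]
```

Sorting by MSV alone means a heavy rewrite with high search demand outranks an easy refresh with little demand, which is the trade-off described above.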

De-Age the Blog Results

Initially, we projected that these updates would be complete by the end of H1 2024, but we had to shift our strategy … again.

Like many other publishers, we felt the effects of Google’s March 2024 Core Update as well as the introduction of AI Overviews.

After placing the De-Age the Blog Project on hold while we addressed these issues, we deprioritized the project entirely in favor of higher-impact workstreams.

SEO, am I right? It always keeps you on your toes.

Despite sunsetting the project before it was complete, we were still able to perform 76 post updates. Six months after the updates were implemented, the cumulative monthly traffic for these posts had increased by 458%.

This goes to show that even updating a small portion of URLs can make a big difference.
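A percentage increase is measured against the starting value, so a 458% increase means traffic ended at about 5.58 times its starting level, not 4.58 times. The Python sketch below uses a hypothetical baseline of 10,000 monthly visits purely for illustration; the underlying traffic numbers aren’t shared here.

```python
def percent_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


def after_from_percent(before, pct_increase):
    """Value reached after growing `before` by `pct_increase` percent."""
    return before * (1 + pct_increase / 100)


baseline = 10_000  # hypothetical monthly visits before the updates
print(after_from_percent(baseline, 458))  # roughly 55,800
```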

Taking Action on Content with No Organic Potential

While the De-Age the Blog Project was taking place, we also took action on the 2,888 URLs that were recommended to be pruned.

Since the initial audit didn’t include recommendations on how to prune each URL, we had to go back and review every URL again to decide whether to archive or redirect it.

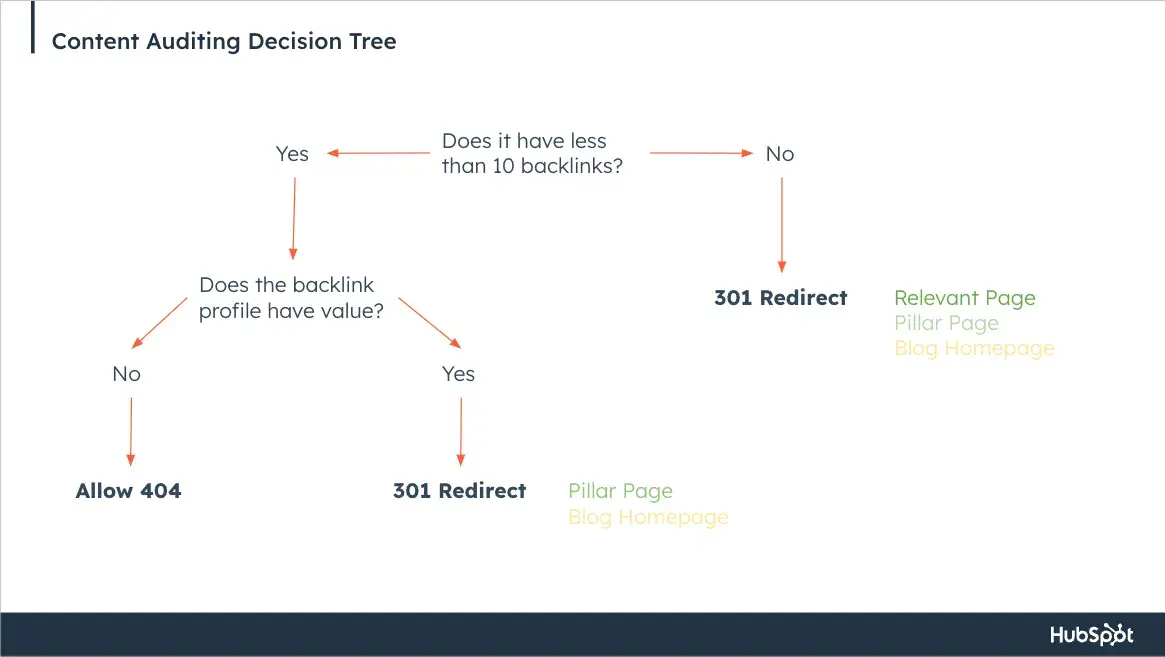

Here’s how we evaluated the posts:

- Archive (404): The URL has fewer than 10 backlinks, and the backlink profile does not have value.

- Redirect (301): The URL has more than 10 backlinks and/or the backlink profile has value.

How exactly did we determine the backlink profile value? Rory Hope, HubSpot’s head of SEO, recommended we follow these steps:

1. Log in to Ahrefs and enter the URL in the Site Explorer search bar.

2. Select Overview from the left-hand sidebar.

3. Scroll down and click Backlink Profile.

4. Scroll down further and select By DR under Referring Domains.

5. Analyze and investigate any referring domains with a DR above 50.

6. Navigate to each referring domain with a DR above 50 by clicking the number.

7. Analyze the Referring page.

Select Redirect (301) if:

- The Referring page link is from a domain that still receives traffic.

Select Archive (404) if:

- The Referring page link appears to be “spammy.” You can determine this by asking the following questions:

  - Does this website only publish low-quality (SEO-led) guest posts on lots of different topics?

  - Does this website still publish content? If not, ignore it.

- The Referring page is from a website that you see linking to a lot of EN Blog posts through an RSS-style automated linking system.
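Taken together, the backlink threshold and the referring-domain checks form a simple rule. Here is a hypothetical Python encoding; the `ReferringDomain` fields stand in for judgments made by reviewing each page in Ahrefs and are not Ahrefs API fields. The criteria above don’t say where a URL with exactly 10 backlinks falls, so this sketch reads “more than 10” literally and archives it unless its profile has value.

```python
from dataclasses import dataclass


@dataclass
class ReferringDomain:
    dr: int             # Ahrefs Domain Rating of the linking site
    has_traffic: bool   # the linking domain still receives traffic
    spammy: bool        # low-quality guest posts, inactive site, or RSS-style autolinking


def profile_has_value(domains, min_dr=50):
    """A profile has value if any non-spammy domain above `min_dr` still gets traffic."""
    return any(d.dr > min_dr and d.has_traffic and not d.spammy for d in domains)


def prune_action(backlinks, domains):
    """Redirect when there are more than 10 backlinks or a valuable profile; otherwise archive."""
    if backlinks > 10 or profile_has_value(domains):
        return "Redirect (301)"
    return "Archive (404)"
```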

Additionally, every URL labeled “Redirect (301)” needed a destination URL to redirect to.

When choosing a new URL, we did our best to pick the most relevant and similar page. If we couldn’t find one, we redirected to the pillar page of the cluster that the post belonged to.

If, for some reason, the URL didn’t belong to a cluster or there wasn’t a pillar page, we redirected it to the HubSpot Blog homepage.
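The destination-picking order works as a fallback chain. A minimal Python sketch, where the candidate arguments and the default homepage URL are assumptions you’d fill in from your own site:

```python
def redirect_destination(similar_page, pillar_page, homepage="https://blog.hubspot.com/"):
    """Pick the most relevant page, then the cluster's pillar page, then the blog homepage."""
    for candidate in (similar_page, pillar_page):
        if candidate:  # skip None or empty strings
            return candidate
    return homepage
```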

Decision-making for some content types was easier than others. For example, we were able to automatically assign 301 redirects to URLs that were flagged for cannibalization during the initial audit. We also automatically assigned 404s to Newsjacking and Business Update URLs with fewer than 10 backlinks.
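The two automatic rules act as a triage pass before manual review. A hypothetical Python version, with content-type labels matching the audit’s categories; checking cannibalization first is an assumption, since the order isn’t specified for a post that matches both rules.

```python
AUTO_ARCHIVE_TYPES = {"Newsjacking", "Business Update"}


def triage(cannibalized, content_type, backlinks):
    """Return an automatic label, or 'Manual review' when a person needs to decide."""
    if cannibalized:
        return "Redirect (301)"
    if content_type in AUTO_ARCHIVE_TYPES and backlinks < 10:
        return "Archive (404)"
    return "Manual review"
```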

Everything else was manually reviewed to ensure accuracy, using a decision tree to make the evaluation process easier.

It took my team about two and a half weeks to ensure that every URL had the correct label. In the end, we had 1,675 URLs assigned to be redirected and 1,210 URLs assigned to be unpublished and archived.

Once each URL was evaluated, we were finally ready to take action.

After coordinating with Rory and Principal Technical SEO Strategist Sylvain Charbit, we decided to prune the URLs in batches instead of all at once. That way, we could better monitor the impact of redirecting and archiving a large quantity of content.

Originally, we planned to implement our prune in five batches over five weeks, allowing us time to monitor performance during the weeks in between.

Batches one and two contained URLs meant to be archived and unpublished, and batches three through five contained URLs designated for 301 redirects.
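How URLs were divided within each group isn’t specified, so this Python sketch assumes roughly equal batches: the 1,210 archive URLs split across batches one and two, and the 1,675 redirect URLs across batches three through five.

```python
def split_evenly(items, n_batches):
    """Split `items` into `n_batches` consecutive batches whose sizes differ by at most one."""
    base, extra = divmod(len(items), n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        size = base + (1 if i < extra else 0)
        batches.append(items[start:start + size])
        start += size
    return batches


archive_urls = [f"archive-{n}" for n in range(1210)]    # placeholders
redirect_urls = [f"redirect-{n}" for n in range(1675)]  # placeholders
schedule = split_evenly(archive_urls, 2) + split_evenly(redirect_urls, 3)
print([len(batch) for batch in schedule])  # [605, 605, 559, 558, 558]
```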

Because there were so many URLs to unpublish and archive, we worked with developers on HubSpot’s Digital Experience team to create a script that would automatically unpublish and archive URLs and redirect them to our 404 page.

Then, we were able to implement the 301 redirects with the Bulk URL Redirect tool in Content Hub.

Note: Although we were able to work through this process internally and finish before our deadline, I want to acknowledge that manually evaluating over 2,000 URLs can be tedious and time-consuming.

Depending on your resources and the scope of your audit, you may want to consider hiring a freelancer to help your team work through a task this large.

Content Pruning Results

While we successfully implemented each batch, this process didn’t come without a few road bumps.

Midway through our pruning schedule, Google rolled out the March 2024 Core Algorithm Update. We ended up placing our pruning schedule on hold so we could better monitor performance during the update.

Once the update was complete, we resumed the prune and finished the remaining batches.

Because of the volatile search landscape in 2024, we didn’t see the traffic gains we’d hoped to see once the prune was complete. However, we did celebrate a massive win for overall content freshness on the blog.

At the start of our audit in 2023, we calculated the freshness of our content library by using each URL’s publish date to count the number of days since the post was last updated.

For example, say the current date is November 12, 2024, and you have a post that was last updated on February 19, 2008. As of that date, the post is about 16.7 years, or 6,111 days, old.

Once we had all of the ages for every post on the HubSpot Blog, we averaged those numbers to determine the average age of our content library, which was 2,088 days (5.7 years).
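The age calculation is date subtraction followed by an average. A minimal Python sketch using the standard library, shown with the February 19, 2008 example; the function names are illustrative.

```python
from datetime import date
from statistics import mean


def age_in_days(last_updated, today):
    """Whole days between a post's last update and `today`."""
    return (today - last_updated).days


def average_age_days(update_dates, today):
    """Mean age in days across a library of posts."""
    return mean(age_in_days(d, today) for d in update_dates)


today = date(2024, 11, 12)
print(age_in_days(date(2008, 2, 19), today))                     # 6111
print(round(age_in_days(date(2008, 2, 19), today) / 365.25, 1))  # 16.7
```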

Since pruning 2,888 URLs (and updating hundreds of URLs from the audit and beyond), the HubSpot Blog’s average age has dropped to 1,747 days — that’s 341 days younger than when we started.

As content freshness and helpfulness play an even greater role in search algorithms, being nearly a year younger can make a big difference.

What’s Next?

Earlier in this post, I mentioned that this audit is only one of three that my team has worked on in 2024.

Our phase two audit focuses on the lowest-performing posts that were not included in phase one, totaling over 6,000 URLs. Then, phase three assesses the value of our blog’s topic clusters.

We’re still taking action on the results from these audits, but I’m so excited to share the process and insights when they’re complete.

Ultimately, content auditing is a job that is never truly done — especially when working with large libraries. You finish one audit, then it’s on to the next.

Although the work can be tedious, the rewards of improving content quality, user experience, and performance make it worth the effort.
